Although ARIMA is quite good at making predictions for time series data, it's pretty time-consuming if you have to fit many series. For this project specifically, we need to make 60-day predictions for 145,063 series. Imagine how long that's going to take. Training and predicting 60 days for one series takes about 1 minute, so for 145,063 series it would take 145063 / 60 / 24 ≈ 100 days!! Time to seek solutions from deep neural nets...
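The estimate is easy to reproduce. This minimal sketch assumes the rough figure of ~1 minute per series and strictly sequential fitting with no parallelism. The constant names are made up for illustration.

```python
# Back-of-the-envelope cost of fitting one ARIMA model per series, one after another.
N_SERIES = 145_063        # number of series in this project
MINUTES_PER_SERIES = 1.0  # assumed rough time to train + forecast 60 days for one series

total_minutes = N_SERIES * MINUTES_PER_SERIES
total_hours = total_minutes / 60
total_days = total_hours / 24
print(f"{total_hours:,.0f} hours, about {total_days:.1f} days")
```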

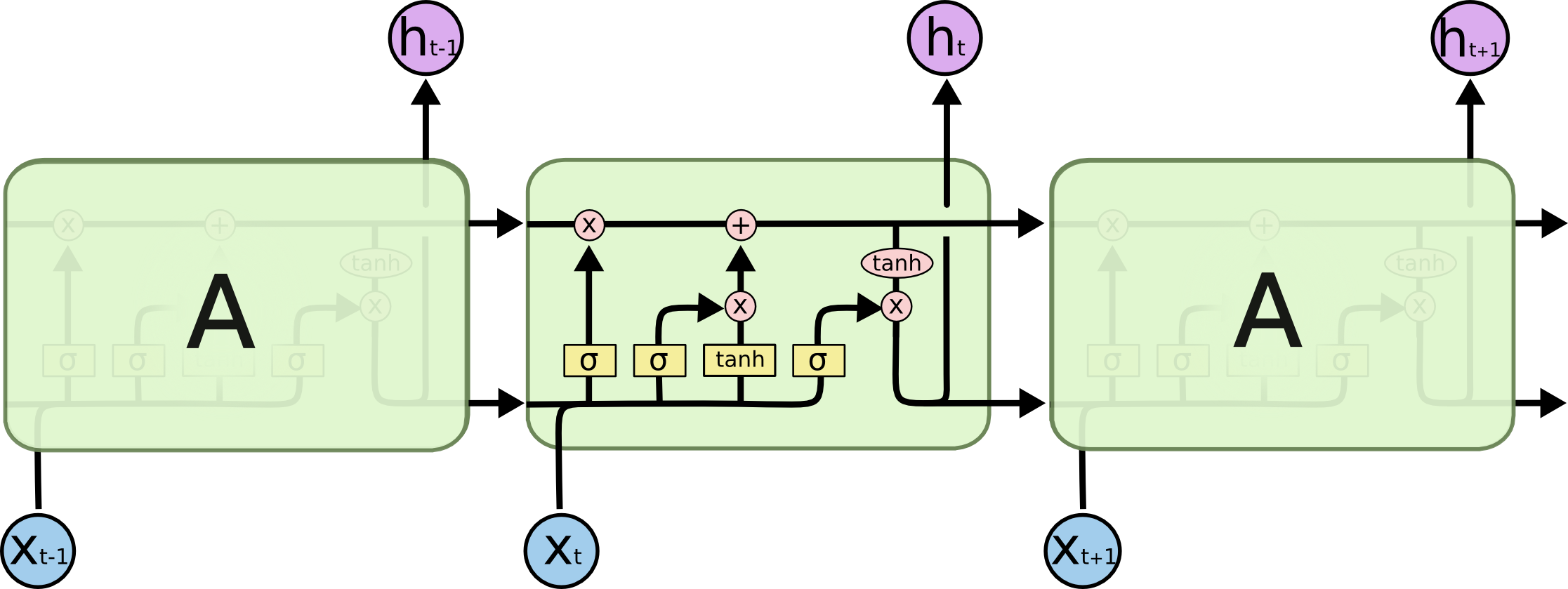

I'm sure we all know the looped structure of an RNN. An LSTM has a similar structure: if you unroll the loop, you get the structure below, with several gates inside each cell. The leftmost sigmoid layer is the forget gate; it decides how much of the previous cell state to forget. The middle two (a sigmoid layer and a tanh layer) decide what to update: the sigmoid (the input gate) chooses which values to update, and the tanh layer creates candidate values. Together they determine the new information written into the cell state and passed to the next step. Finally, the rightmost sigmoid layer (the output gate), combined with a tanh applied to the cell state, decides the output for this step. For more details on the structure of the LSTM, check out colah's blog!
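To make the gate descriptions concrete, here is a minimal NumPy sketch of a single LSTM step in the standard formulation from colah's post. The names `lstm_step`, `W`, `b` and the gate ordering inside the stacked weight matrix are my own choices for illustration, not any library's API. The weights are random, so this only demonstrates shapes and data flow, not a trained model.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step.

    W has shape (4*H, H + D) and b has shape (4*H,); the four gate layers
    are stacked in the (arbitrary) order: forget, input, candidate, output.
    """
    H = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b  # compute all four layers at once
    f = sigmoid(z[0:H])              # forget gate: how much of c_prev to keep
    i = sigmoid(z[H:2 * H])          # input gate: how much of the candidate to write
    c_tilde = np.tanh(z[2 * H:3 * H])  # tanh layer: candidate values
    o = sigmoid(z[3 * H:4 * H])      # output gate
    c_t = f * c_prev + i * c_tilde   # updated cell state
    h_t = o * np.tanh(c_t)           # output (hidden state) for this step
    return h_t, c_t

rng = np.random.default_rng(0)
D, H = 3, 5                                   # input size, hidden size
W = rng.normal(scale=0.1, size=(4 * H, H + D))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(10, D)):          # "unroll" the loop over 10 time steps
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, c.shape)
```

Stacking the four layers into one matrix multiply is just a convenience; it is equivalent to four separate layers applied to the same concatenated `[h_prev, x_t]`.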

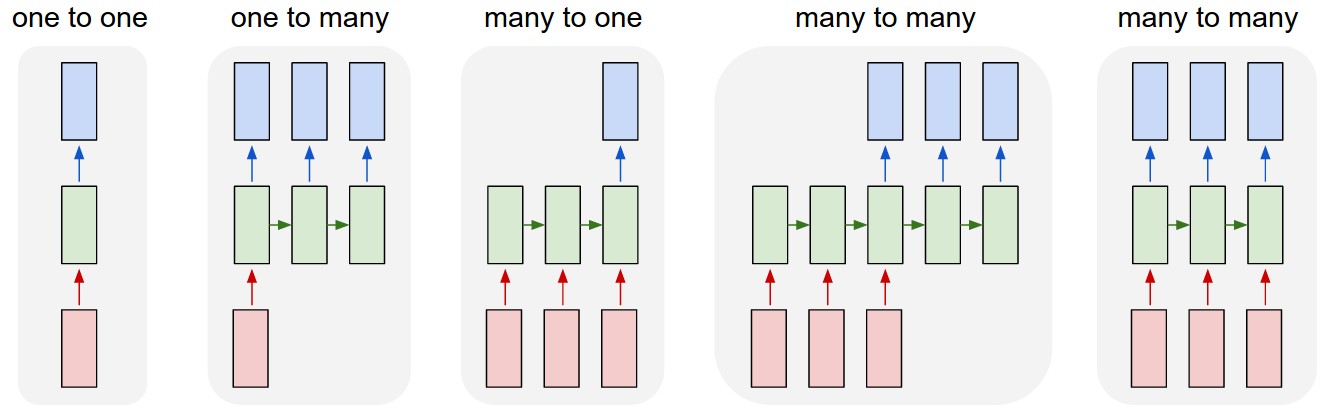

RNNs/LSTMs are good at learning patterns from sequential input. And thanks to its gated architecture, an LSTM can control what it keeps in, adds to, and reads from its memory (cell) state. LSTMs are also flexible in the input/output configurations they support: many to one (predict a single timestep given all the previous timesteps), many to many (predict multiple future timesteps at once given all the previous timesteps), and several other variations. The model I'm using here is a many-to-many model.
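The difference between many to one and many to many shows up mostly in how the training samples are sliced. Below is a minimal pure-Python sketch, assuming a single univariate series; `make_windows`, `n_in`, and `n_out` are hypothetical names. For this project's many-to-many model, `n_out` would be 60 (the forecast horizon). The input window length isn't stated here, so the values below are only a toy example.

```python
def make_windows(series, n_in, n_out):
    """Slice a 1-D series into (input window, target window) pairs.

    n_out == 1 gives many-to-one samples; n_out > 1 gives many-to-many
    samples, where all n_out future steps are predicted at once.
    """
    X, Y = [], []
    for start in range(len(series) - n_in - n_out + 1):
        X.append(series[start:start + n_in])                  # past n_in values
        Y.append(series[start + n_in:start + n_in + n_out])   # next n_out values
    return X, Y

series = list(range(10))                        # toy series: 0, 1, ..., 9
X1, Y1 = make_windows(series, n_in=4, n_out=1)  # many to one
X2, Y2 = make_windows(series, n_in=4, n_out=3)  # many to many
print(X1[0], Y1[0])  # [0, 1, 2, 3] [4]
print(X2[0], Y2[0])  # [0, 1, 2, 3] [4, 5, 6]
```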

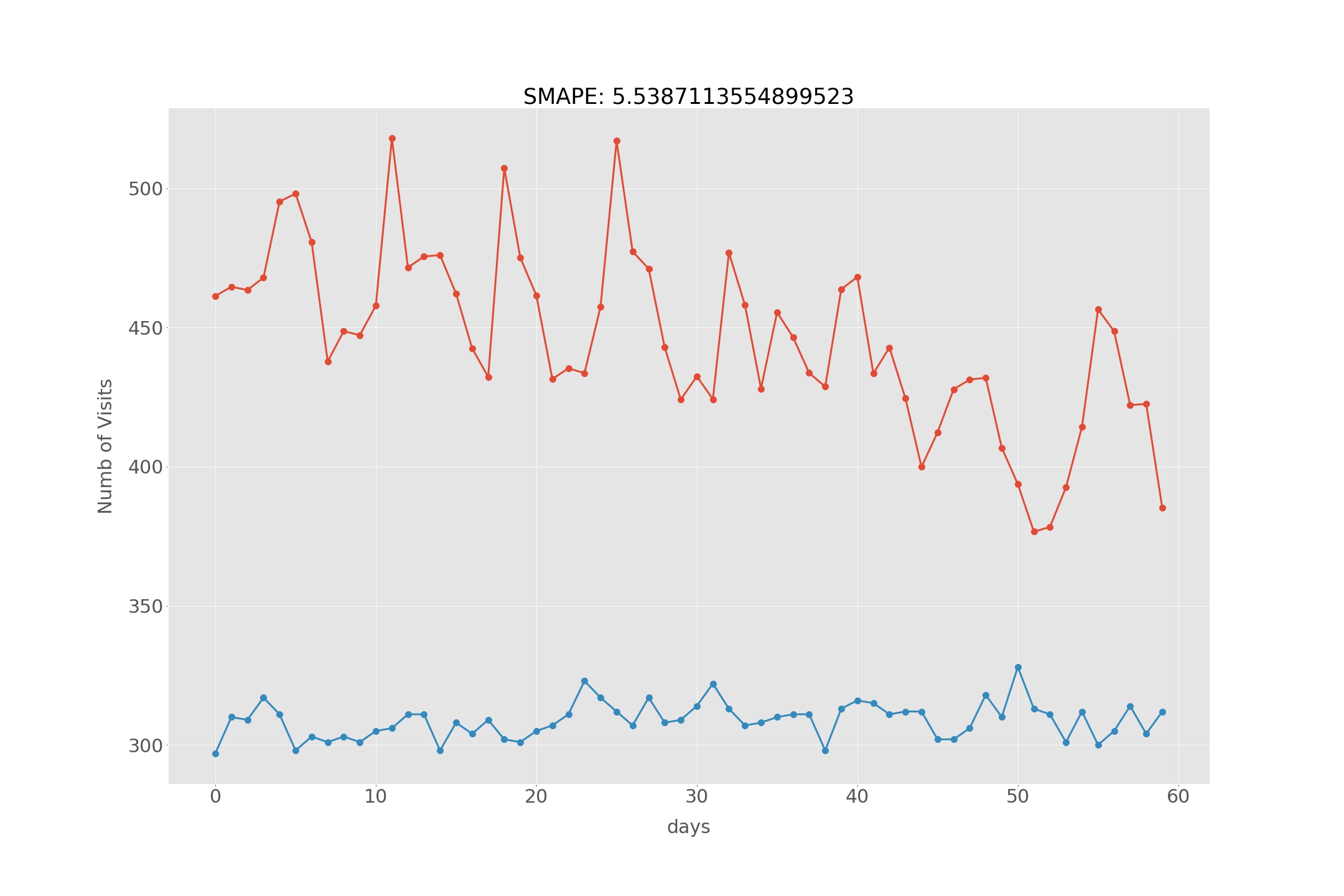

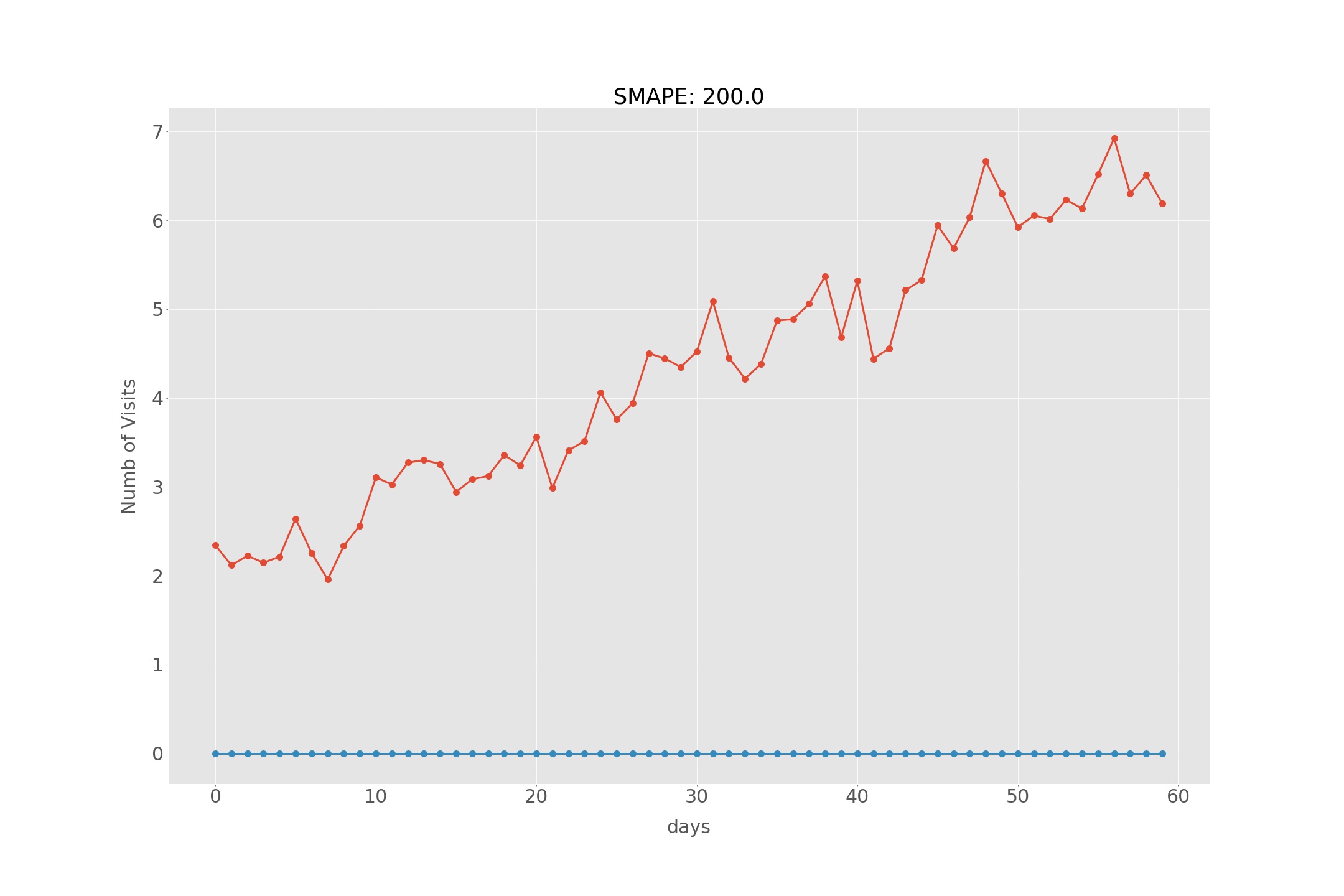

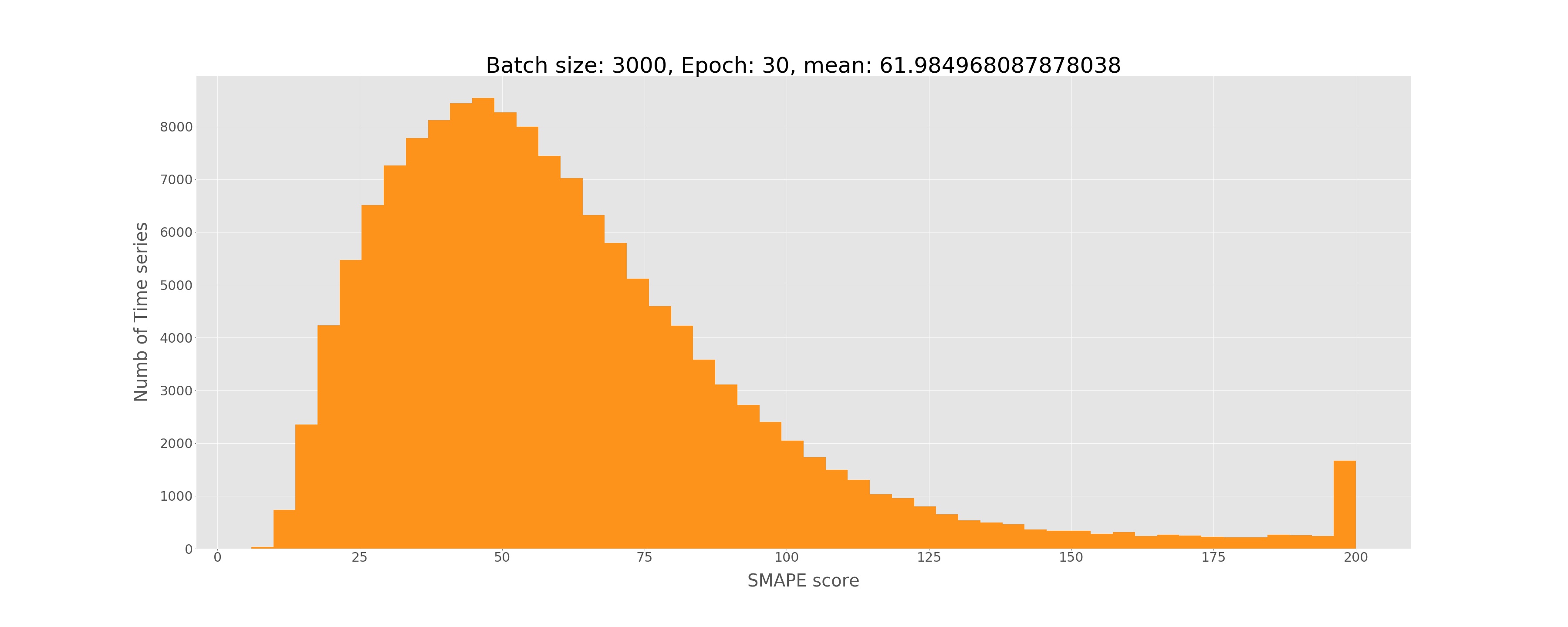

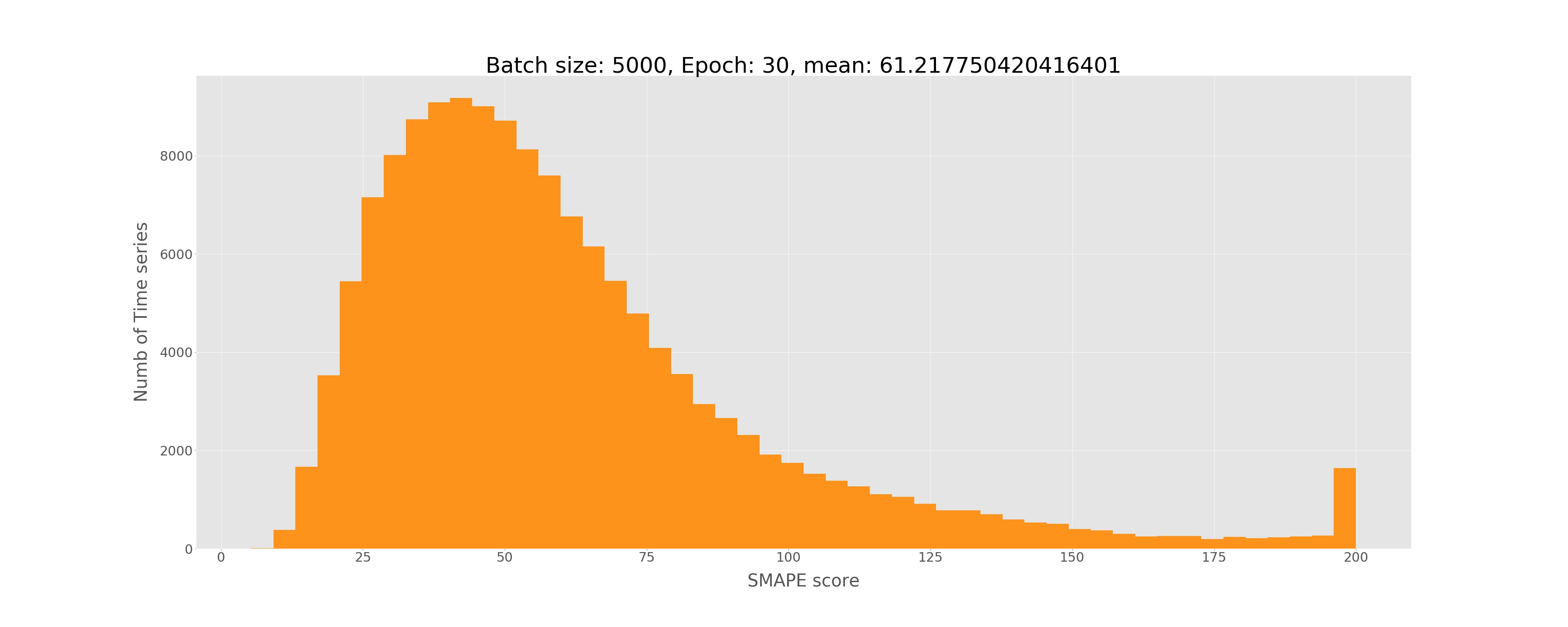

Well... not as good as ARIMA, sadly 😢. Luckily, there was still time to run some experiments.

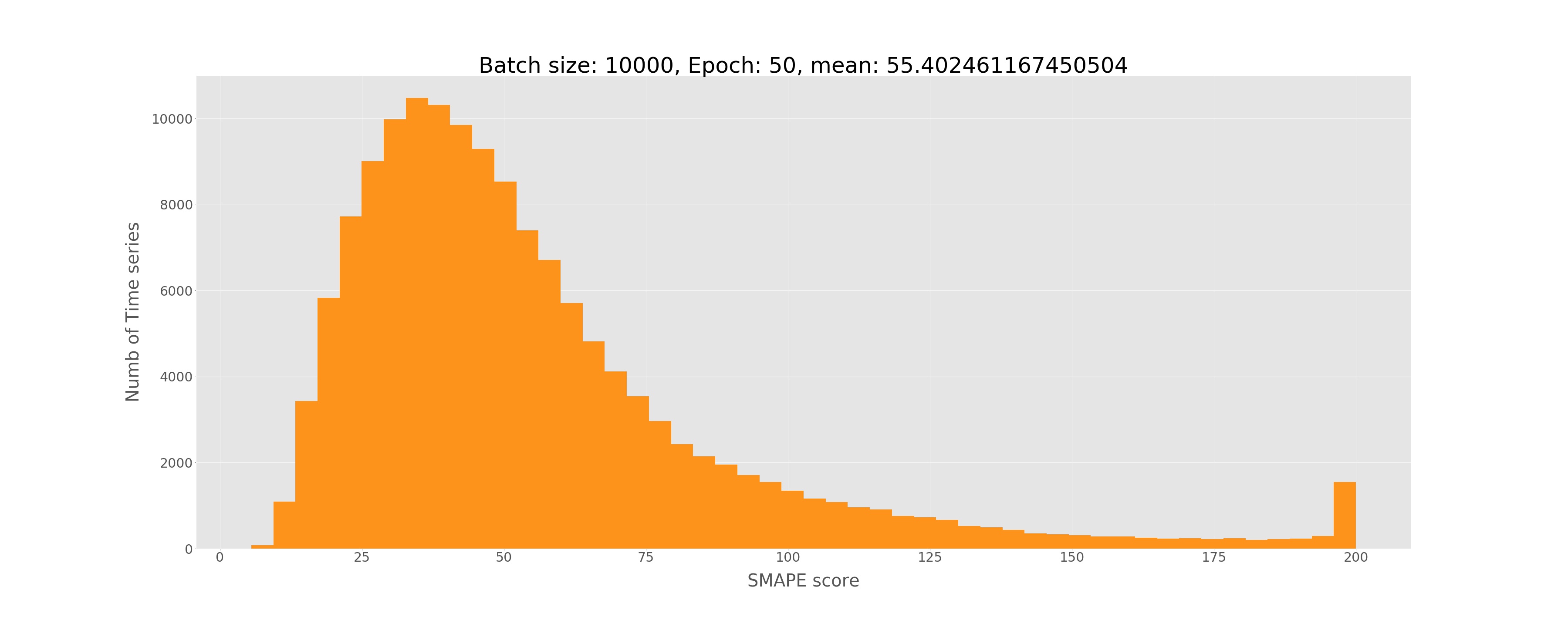

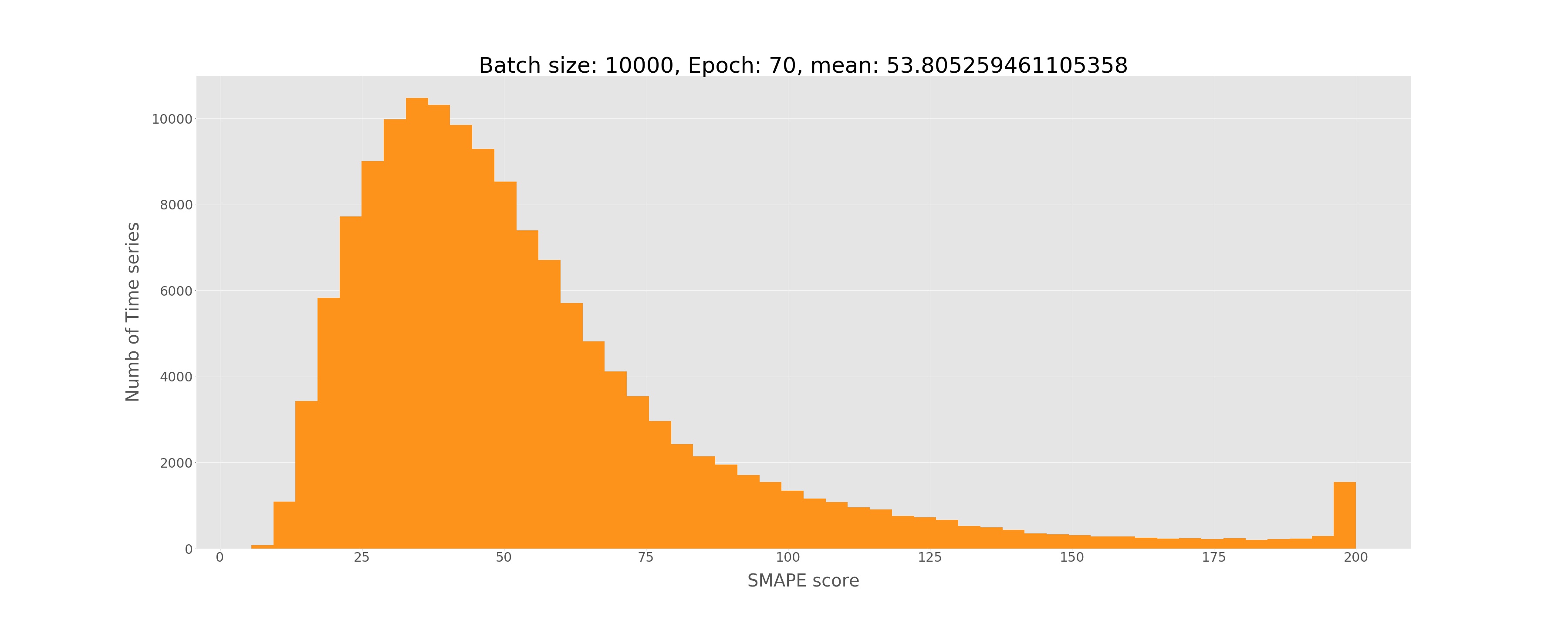

Increasing the batch size alone doesn't seem to help at all... What if we increase both the batch size and the number of epochs?

OK, let's see what good and bad predictions look like: